I am happy to announce that Reddit founder Steve Huffman and Google’s Matt Cutts are joining my Web 2.0 Summit session, Defend Web 2.0 From Virtual Blight. They will be joined by Jonathan Hochman, who will discuss strategies Wikipedia uses to address blight, and Guru Rajan, who will present a case study about HumanPresent, a new technology from Pramana that offers a less obtrusive (and currently more effective) alternative to CAPTCHA.

Both Steve and Matt spend most of their day in a cat-and-mouse game against spammers and others who seek to game their systems for personal gain. Wikipedia has hundreds of thousands of contributors with a wide variety of agendas. To keep pace, Wikipedia uses a variety of bots and human editorial strategies. These panelists have a tremendous amount of experience and will share some powerful strategies to help address blight. I have been talking about Virtual Blight as a construct for understanding and addressing many of the issues facing site operators for over a year now, and it is really great to get a chance to broaden the audience. My pitch for the session is below.

The success stories of Web 2.0 are the so-called “Social Web”, sites built on crowdsourcing: user participation, user-generated content and user voting/rating systems. Sites such as YouTube, Digg, Yelp and Facebook provide mashups of content sources along with a platform for interaction and participation. Inherent in this model is the assumption that each “user” is an individual who is participating in a community. The reality is that many “users” are avatars, bots and sock puppets created to spread spam and disinformation, attack individuals, organizations and companies, or manipulate rating systems to promote a private agenda that is not in keeping with the spirit and intent of the community.

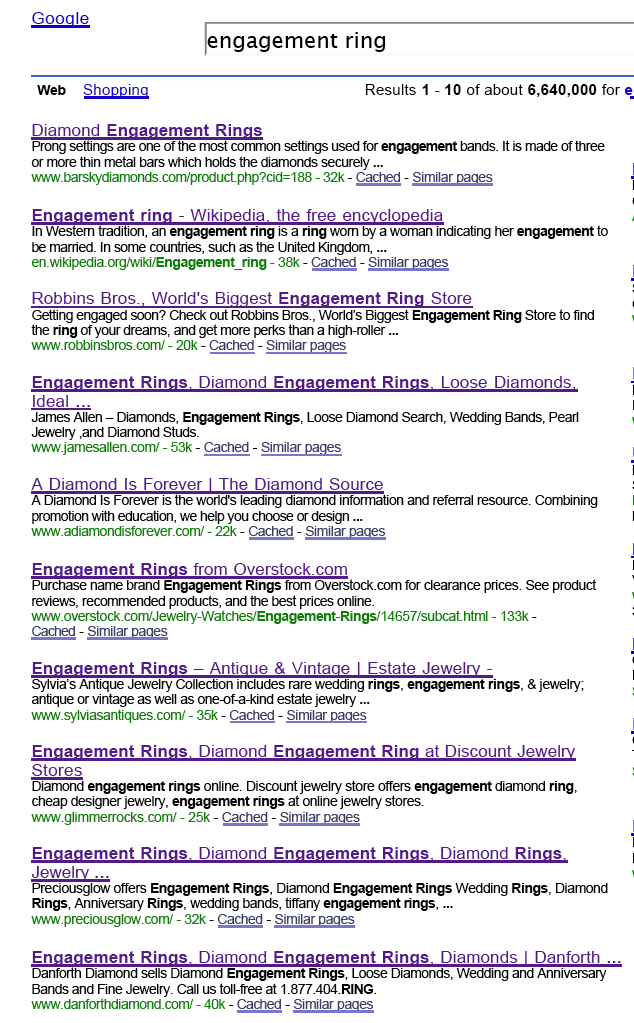

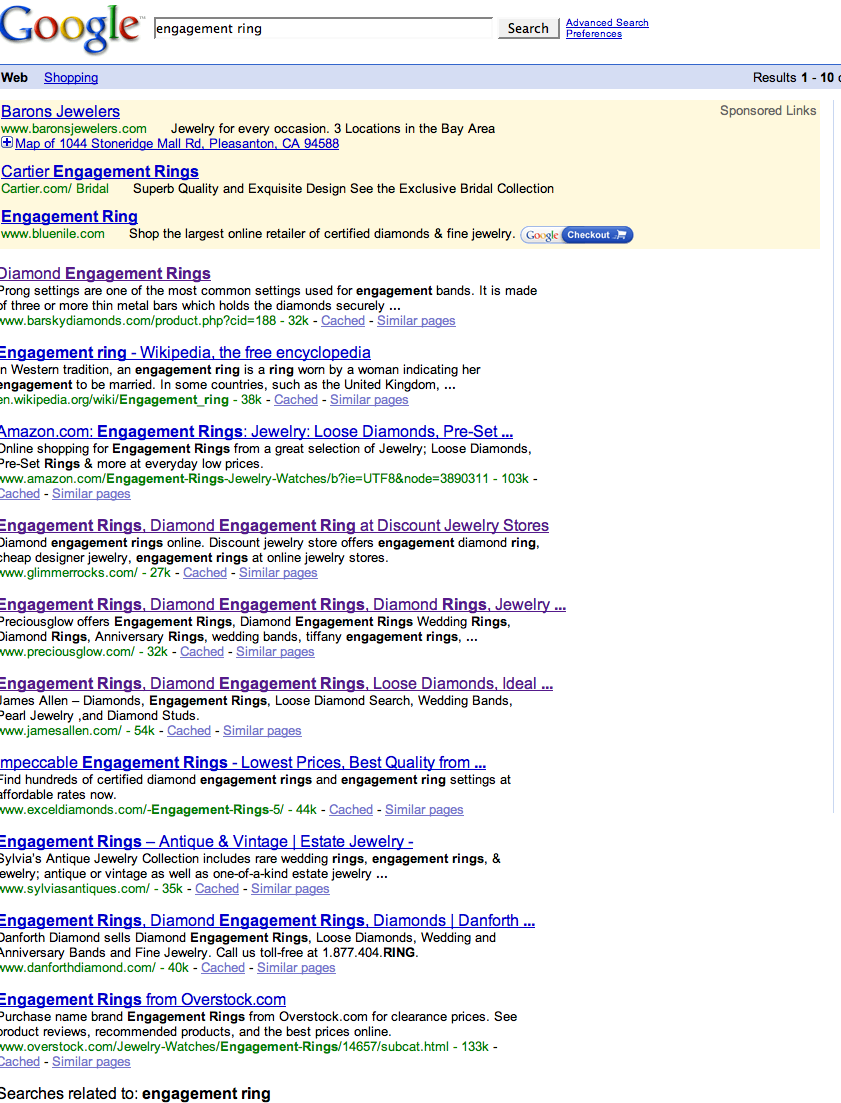

The success of Web 2.0 has made it a prime target for spammers, vandals and hackers who want to exploit the trust implicit in this ecosystem. The May 2008 headlines about Craigslist’s ongoing battle with spammers highlight the problem. Web 2.0 companies need to recognize this type of manipulation as a fatal cancer and develop strategies to aggressively defend themselves against the ravages of blight that can devastate their communities.

We are all too familiar with community blight in the physical world: the downward spiral afflicting many of our urban and suburban neighborhoods. Blight is marked by abandoned and foreclosed buildings, liquor stores and payday lenders on alternating corners, trash-strewn lots and front yards, graffiti-covered buildings, broken sidewalks, broken glass and billboards everywhere you look. Prostitutes, drug dealers and scam artists haunt the shadows.

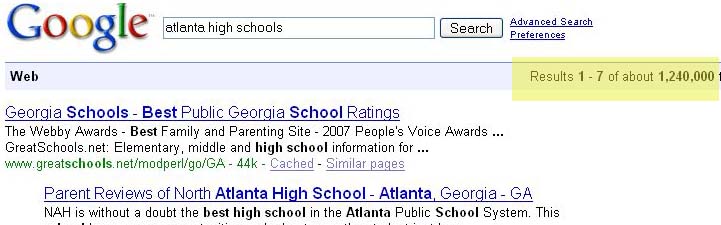

Domains and web properties afflicted with Virtual Blight are like neighborhoods suffering from urban blight. Billboards advertise payday loans, pornography and offshore pharmaceuticals; street corner hustlers offer knockoff watches, get-rich-quick schemes, pirated movies and software, along with other products that are suspect and often illegal. Kids aren’t safe to roam around, and people move out. Online neighborhoods begin as attractive destinations, but often they turn into vacant, desolate ruins. Hotmail and GeoCities are two prime examples of Web neighborhoods that have been impacted by virtual blight, destroying billions of dollars’ worth of brand equity in the process.